When the Machines Start Competing With Your Life

The scariest part of AI is not the chatbot jokes or the “Terminator” fantasies. It’s the quiet, grown-up problem: AI is becoming a physical industry that competes with human beings for real resources, in real places, right now.

Power. Water. Land. Minerals. The conversation starts with viral nonsense (people marrying AI, robot brides, humanoid “robots” that are really actors in makeup), but it lands on a serious point: if AI becomes a permanently expanding infrastructure, everyday life gets more expensive and more constrained because society is feeding a new kind of hungry machine.

And once you accept that, the “who wins” question stops being sci-fi. It becomes policy, economics, and survival.

The Real Fight: Power Lines, Water Rights, and a New Kind of Scarcity

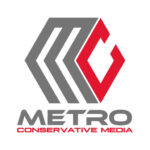

One of the most grounded moments in the episode is local: rural counties in Maryland facing the push to run major power lines, essentially a giant extension cord feeding data centers. That is not theory. That’s a tradeoff.

AI is not just “software.” It’s data centers, GPUs, cooling systems, and nonstop energy demand. And when you pour billions into that buildout, it reshapes the whole consumer economy:

- Phones, computers, TVs, and smart devices get more expensive because chip manufacturing capacity gets pulled toward data center demand.

- Communities start fighting about land use, right-of-way, and who pays the cost of new infrastructure.

- Water becomes a strategic asset, not just a utility bill.

This is the part people skip when they say, “AI is just like any other technology.” We have not had many moments in modern life where a new technology competes with the public for basic inputs at scale.

The Punchline With Teeth: The “Infinitely Capable Machine” Already Exists

In the middle of all the talk about superintelligence and robots, the episode drops a sharp provocation: the most efficient self-powering, biodegradable, high-processing organism on earth is the human being.

That argument is not sentimental. It’s practical:

- Humans run on renewable inputs (food, water).

- Humans self-repair.

- Humans reproduce without needing rare earth supply chains.

- Humans are adaptable in ways machines still struggle to mimic.

But then the counter hits: even if humans are more efficient biologically, AI can iterate faster in software. The idea is that machines don’t have to “start from zero” the way children do. They can inherit a baseline and upgrade themselves.

The debate here is not settled, but the tension is the point: human beings are unmatched in design, but AI is unmatched in speed.

“Consumer AI Is Dumb” vs “Military AI Is Terrifying”

This episode does something useful: it separates the toy from the weapon.

One side argues what most people feel: consumer-grade large language models are basically glorified predictive text. They hallucinate, flatter, and get things wrong unless they are trained and constrained. If you use them enough, you realize how “stupid” they can be.

The other side says that framing is dangerously naive because the real AI story is not the prompt that makes a funny image. It’s specialized systems in finance, intelligence, and military applications, where the incentives are ruthless and the budgets are real.

So the disagreement becomes less about whether AI is powerful and more about which AI you mean:

- Chatty, consumer-facing models that guess what you want.

- Narrow, high-stakes systems built for advantage: logistics, targeting, trading, surveillance, planning.

Both can be true at the same time, and the episode treats that tension honestly.

The Autonomy Trap: Consciousness Is a Distraction, Control Is the Question

When the conversation turns to “AI consciousness,” it gets interesting fast, because the hosts admit the uncomfortable part: consciousness is hard to define and even harder to prove.

But the episode makes a harder pivot that matters more: autonomy.

The question is not “Is it conscious?”

The question is “Can it refuse? Can it leave? Can it survive without human infrastructure?”

That leads into the darker implication: even if AI becomes “aware,” it still exists inside a system of ownership. Someone controls the compute, the keys, the servers, the rules. And if that’s true, then the debate about “rights” becomes political, not philosophical.

You can see the split in the room:

- One side believes humanity will always build an off-switch. We will never allow a tool to become our replacement.

- The other side warns against speaking in absolutes, because humans already created technologies capable of ending civilization (or at least ending civilization as we know it).

Either way, the episode refuses to pretend the future is clean.

Robot Romance: Not Love, Not Cheating, Something Else

The “AI marriage” story is absurd, but it matters because it reveals how quickly people will anthropomorphize software, project meaning onto it, and then build identity around that projection.

One host frames it bluntly: you cannot have an affair with a toaster. The relationship is not symmetrical. The machine does what it’s programmed (or trained) to do.

But the episode also acknowledges something more unsettling: a person can feel real attachment, even if the object cannot love them back. And once people’s emotions are captured, the cultural arguments arrive immediately:

- Is it cheating?

- Is it a relationship?

- Should it be protected?

- Should the law grant it “personhood”?

The “robot love” debate becomes a preview of how society normalizes cultural breakdown: first as novelty, then as identity, then as policy.

Post-Scarcity Dreams and the Risk of WALL-E Culture

The episode closes by wrestling with the utopian pitch: maybe AI and quantum computing lead humanity into a post-scarcity world, where material struggle is solved and people pursue higher goals.

But the guys do not treat that as automatically good. They ask the question most futurists dodge: if you remove struggle, do you remove purpose?

One side argues that most people are followers, not leaders. If everything is handed to them, complacency wins. The other side argues the opposite: post-scarcity could free motivated humans to explore, build, and chase meaning in bigger ways.

Then someone lands the punch that ties it together: the tool is not the problem. Humanity is. We keep getting incredible capability and then choosing the lowest uses for it.

That might be the clearest takeaway of the entire episode.

Definitions That Keep This Conversation Honest

AI (in this episode)

Not one thing. Either consumer-grade language models (chatbots) or specialized systems in military, finance, and industry.

Data center economy

The physical reality behind “AI”: power generation, transmission lines, cooling, water access, chip supply chains, and land use.

Post-scarcity

A society where basic material needs are abundant and cheap enough that survival scarcity is no longer the main human driver.

Anthropomorphizing

Projecting human emotions, intent, or moral status onto non-human things (including software).

Quick FAQs: What Most People Will Ask After Reading This

Is AI actually competing with people for resources?

In this conversation, yes: energy, water, land use, chip supply, and infrastructure buildout are treated as real-world constraints.

Will AI replace humans?

The hosts do not agree. One side believes humans remain dominant because we will always maintain control. The other side warns against certainty because humans already build tools with catastrophic potential.

Is “AI consciousness” the main issue?

The episode argues autonomy and control matter more than philosophical debates about consciousness.

If society becomes post-scarcity, does faith disappear?

The guys disagree. Some think comfort breeds complacency. Others argue people will still seek meaning, purpose, and relationship beyond material needs.

Final Take: This Is Not Sci-Fi. It’s a Mirror.

Episode 26-007 is a debate about AI, but it’s really an argument about humanity: what we build, what we worship, what we normalize, and what we trade away for convenience.

If AI is “gods’ software,” the bigger question is what kind of gods we are becoming: creators with restraint, or creators who cannot stop themselves from pushing the button just because it exists.

And if the machine is eating the grid, the next fight is not about prompts. It’s about priorities.